Twitter, Facebook & the 2020 Election

By Christina Georgacopoulos | November 2020

What are the Social Media Giants doing to protect users from fake news and other online threats?

Social media platforms and tech companies are developing tougher user policies and methods for detecting and removing fake accounts and misleading or false information ahead of the 2020 election.

The CEOs of Facebook and Twitter are at odds over what role their platforms should play in our politics, and they differ on what free speech and First Amendment rights and protections should look like online.

Twitter is arguably taking a more aggressive approach in ferreting out disinformation and protecting users. It removes manipulated videos, flags misleading content, and is developing criteria for handling misleading or false tweets. Although Facebook has taken steps to secure its platform ahead of the 2020 election, it faces growing criticism that it is not doing enough to prevent racism, hate speech and manipulated or false media.

Here is a look at how the prominent social media companies are preparing for the 2020 election. This page will be updated according to changing policies and significant events related to how these companies are policing user and advertiser content.

Facebook, the hotbed of Russian election interference in 2016, is taking steps to ensure the platform won’t be weaponized again in 2020. In a memo released in late 2019, Facebook announced several initiatives to “better identify new threats, close vulnerabilities and reduce the spread of viral misinformation and fake accounts.”

Among them are:

- Combating inauthentic behavior with an updated policy on user authenticity

- Protecting the accounts of candidates, elected officials and their teams through “Facebook Protect”

- Making pages more transparent, which includes showing the confirmed owner of a page

- Labeling state-controlled media and their Pages in the Facebook “Ad Library”

- Making it easier to understand political ads, which includes a new U.S. presidential candidate spending tracker

- Preventing the spread of misinformation by including clear fact-checking labels on problematic content

- Fighting voter suppression and interference by banning paid ads that suggest voting is useless or that advise people not to vote

- Investing $2 million to support media literacy projects to help people better understand the information they see online

Facebook has reportedly removed over 50 networks of coordinated inauthentic behavior among accounts, pages and groups on the platform. Some of these coordinated disinformation networks were located in Iran and Russia.

According to Facebook’s “Inauthentic Behavior” policy, information operations are taken down based on behavior, not on what is said. Much of the content shared through coordinated information operations is not demonstrably false and would be acceptable public discourse if shared by authentic users. The primary issue is that bad actors use deceptive behaviors to promote an organization or particular content and make it appear popular and trustworthy.

- Inauthentic behavior occurs when users misrepresent themselves by using a fake account, often with the aim of misleading other users and engaging in behavior that violates Facebook’s Community Standards. It also covers cases in which an individual operates multiple accounts simultaneously, or in which one account is shared between multiple people. By harnessing the power of multiple accounts, a user can abuse reporting systems to harass other users or artificially boost the popularity of some content. Concealing the identity, purpose or origin of accounts, pages, groups or events, or the source or origin of content, is also considered inauthentic behavior.

Facebook Protect is designed to safeguard and secure the accounts of elected officials, candidates and their staff. Participants who enroll their page or Facebook or Instagram account will be required to turn on two-factor authentication, and their accounts will be monitored for hacking, such as login attempts from unusual locations or unverified devices.

Facebook is ensuring that Pages are authentic and transparent by displaying the primary country location of any given Page, and whether the Page has merged with others, which gives more context as to the origin and operation of individual Pages.

Many users fail to disclose the organization behind their Page as a way to obscure ownership or make the Page appear to be independently operated. To address this issue, Facebook is requiring that organizations and Page owners are visible and contactable. Pages that run ads about social issues, elections or politics in the U.S. require registration and verification.

Facebook labels media outlets that are wholly or partially under the editorial control of a government. These Pages will be held to a higher standard because they combine the opinion-making influence of a media organization with the strategic power of a government.

To develop its own definition and standards for state-controlled media organizations, Facebook executives sought input from more than 40 experts around the world who specialize in media, governance, human rights and development.

Facebook considers several factors that indicate whether a government exerts editorial control over content, such as:

- The ownership structure of the media outlet, such as owners, stakeholders, board members, management, government appointees in leadership positions, and disclosure of direct or indirect ownership by entities or individuals holding elected office

- Mission statements, mandates, and public reporting on how the organization defines and accomplishes its journalistic mission

- Sources of funding and revenue

- Information about newsroom leadership and staff

Facebook has made its Ad Library, Ad Library Report and Ad Library API available to help journalists, lawmakers, researchers and ordinary citizens learn more about the ads they encounter.

This includes a spending tracker to see how much each candidate in an election has spent on ads, and making it clear whether an ad ran on Facebook, Instagram or somewhere else.

Facebook and Instagram limit the spread of misinformation by reducing its distribution via the Explore Feed and hashtags. Content from accounts that repeatedly post misinformation is filtered out of the Explore Feed, or Facebook will place restrictions on the Page’s ability to advertise and monetize.

Content on both Facebook and Instagram that has been rated false or partly false by third-party fact-checkers, who are certified through the non-partisan International Fact-Checking Network, will be prominently labeled so users can decide credibility for themselves.

Facebook prohibits content that misrepresents the dates, locations, times and methods for voting or voter registration, as well as misinformation about who can vote, qualifications for voting, whether a vote will be counted, and threats of violence related to voting, voter registration or the outcome of an election.

Facebook’s Elections Operations Center removed more than 45,000 pieces of content that violated these policies, more than 90% of which their system detected before the content was reported.

Facebook’s hate speech policy bans efforts to exclude people from political participation on the basis of race, ethnicity, or religion. Additionally, Facebook has banned paid advertisements that suggest voting is useless or meaningless, or that advise people not to vote. Facebook employs machine learning to identify potentially incorrect or harmful voting information.

Facebook invested $2 million to support projects that promote media literacy. The platform provides a series of media literacy lessons in its Digital Literacy Library. The lessons, created for middle and high school educators, are designed to cover topics ranging from assessing the quality of information to technical skills like reverse image search.

Facebook Still Allows Micro-Targeting of Political Ads

In October 2019, Facebook CEO Mark Zuckerberg described the ability of ordinary people to engage in political speech online as “...a new kind of force in the world -- a Fifth Estate alongside the other power structures of society.”

In stark contrast with Twitter, which prohibits political advertising, Facebook has adopted a “hands-off” policy when it comes to policing who buys political ads and what they ultimately say. The platform's approach “is grounded in Facebook’s fundamental belief in free expression, respect for the democratic process and the belief that, in mature democracies with a free press, political speech is already arguably the most scrutinized speech there is,” according to Facebook’s head of global elections policy.

But Facebook's hands-off policy is not without controversy. In late June 2020, a large number of companies threatened to boycott paid advertising on Facebook and Instagram to show support for a campaign called #StopHateForProfit.

Facebook faced heavy criticism in early June for leaving up posts by President Trump that many said were "glorifying violence" related to the George Floyd protests. Twitter attached a warning label to the president's controversial Tweet, which read "when the looting starts, the shooting starts," but Facebook said the post did not violate its rules.

The Anti-Defamation League and the NAACP, along with Sleeping Giants, Color of Change, Free Press and Common Sense, asked “large Facebook advertisers to show they will not support a company that puts profit over safety” in response to “Facebook’s long history of allowing racist, violent and verifiably false content to run rampant on its platform.” The groups say Facebook is used in “widespread voter suppression efforts, using targeted disinformation aimed at black voters,” and “allowed incitement of violence to protesters fighting for racial justice in America in the wake of George Floyd, Breonna Taylor, and others.”

Zuckerberg responded that the platform would not change its advertising policy "because of a threat to a small percent of our revenue, or to any percent of our revenue." Shortly after the campaign launched in early June, Facebook lost $60 billion in market value and nearly 500 companies had pledged to boycott advertising on the platform.

In stark contrast with Facebook, Twitter CEO Jack Dorsey announced in October 2019 that political ads are banned from its platform, saying “We believe political message reach should be earned, not bought.” He added that “Internet political ads present entirely new challenges to civic discourse: machine learning-based optimization of messaging and micro-targeting, unchecked misleading information, and deep fakes.”

In early February 2020, Twitter announced new rules addressing deepfakes and other forms of synthetic and manipulated media. Twitter will not allow users to “deceptively share synthetic or manipulated media that are likely to cause harm,” and will start labeling tweets containing synthetic or manipulated content to provide more context.

Twitter uses three criteria to consider Tweets and media for labeling or removal. The first is whether the media is synthetic or manipulated:

- Whether the content has been substantially edited in a manner that fundamentally alters its composition, sequence, timing, or framing

- Whether any visual or auditory information has been added or removed, such as new video frames, overdubbed audio, or modified subtitles

- Whether media depicting a real person has been fabricated or simulated

The second is whether the media is shared in a deceptive manner:

- Whether the context could result in confusion or misunderstanding or suggests a deliberate intent to deceive people about the nature or origin of the content

- Twitter assesses the context using metadata associated with the media, information on the profile of the person sharing the media, or websites linked in that person's profile

The third is whether the content is likely to cause harm, including:

- Threats to the physical safety of a person or group

- Risk of mass violence or widespread civil unrest

- Threats to the privacy or ability of a person or group to freely express themselves or participate in civic events, such as stalking, targeted content such as tropes, epithets, or material that aims to silence someone, and voter suppression or intimidation

When a tweet violates Twitter's manipulated media policy, the platform will:

- Apply a label to the tweet in question

- Show a warning to users before they retweet or like the post

- Reduce the visibility of the tweet on Twitter and/or prevent it from being recommended

- Provide additional explanations or clarifications if available

The policy can target “cheapfakes,” or relatively low-tech media manipulation, such as the doctored video of Democratic House Speaker Nancy Pelosi that circulated last year. The video, which was simply slowed down, appeared to show Pelosi slurring her speech. People accused her of being drunk and took aim at her mental state. Although the video was doctored, Facebook decided not to remove it. But YouTube, which is owned by the parent company of Google, removed the video for violating the platform’s policies.

In late 2019, Twitter threatened to hide tweets of world leaders behind a warning label if their messages incited harassment or violence. Twitter also said it would mark tweets containing misinformation with labels that link to sites with reputable information.

In late May 2020, Twitter for the first time added links to two of President Trump’s tweets, urging Twitter users to “get the facts.” The links came after years of pressure on Twitter over its inaction on the president’s false or threatening posts. But some criticize Twitter for what appears to be a lack of consistency in enforcing its policies.

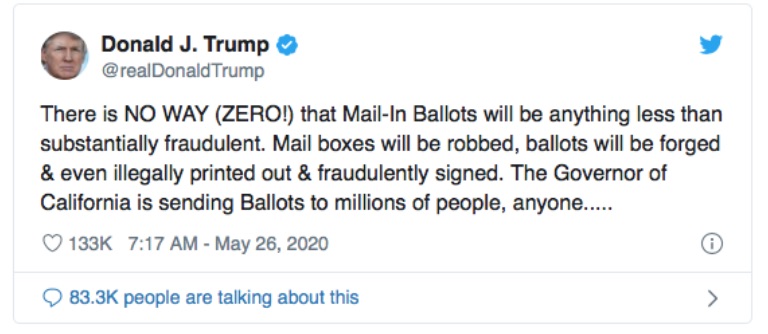

Twitter flagged two of Trump’s tweets that contained inaccuracies about mail-in ballots, in which he claimed there is no way “that Mail-In Ballots will be anything less than substantially fraudulent.” Previously, Twitter had stated that Trump’s tweets did not violate the platform’s terms of service.

Twitter and Facebook acted in solidarity in June 2020 to remove a doctored video posted by President Trump that showed two toddlers, one white, one Black, running down a sidewalk with a fake CNN headline that read: “Terrified toddler runs from racist baby.” Facebook removed the video over a copyright complaint, which eventually prompted its removal on Twitter. However, initially Twitter took a stronger, more pointed stance by labeling the video manipulated media per its policy.

The platforms removed the deceptive footage after a copyright complaint from one of the children’s parents, according to a CNN report. Trump used the video, which went viral last year, to suggest CNN had manipulated the context of the video to stoke racial tensions.

Trump To Limit Social Media Legal Protections

An executive order signed by President Trump on May 28, 2020, states that the “growth of online platforms in recent years raises important questions about applying the ideals of the First Amendment to modern communications technology.” When presenting the order, Trump said a “small handful of powerful social media monopolies control the vast portion of all private and public communications in the United States.”

The order takes aim at a 1996 law passed by Congress that protects internet companies from lawsuits over content that appears on their platforms. Overturning 25 years of judicial precedent by revoking Section 230 of the Communications Decency Act would end liability protections for social media platforms and make them responsible for what billions of users around the world post on their sites.

- Section 230 states that "No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider" (47 U.S.C. § 230). Essentially, Section 230 protects social media platforms from liability for objectionable speech posted by their users because online intermediaries of speech are not considered publishers.

Legal experts describe the executive order as “political theater,” saying it does not change existing law and will have no bearing on federal courts.

Twitter CEO Jack Dorsey responded to Trump’s regulation threats, saying “Our intention is to connect the dots of conflicting statements and show the information in dispute so people can judge for themselves. More transparency from us is critical so folks can clearly see the why behind our actions.”

In contrast, Facebook CEO Mark Zuckerberg responded that social media companies should stay out of fact checking, saying that “private companies probably shouldn’t be… in the position of doing that.” Facebook refrains from removing content because, according to Zuckerberg, “our position is that we should enable as much expression as possible unless it will cause imminent risk of specific harms or dangers spelled out in clear policies.”

In a move that echoes President Trump’s executive order, the Justice Department announced it is proposing legislation to curtail legal protections for social media platforms for the content that appears on their sites. The proposal is said to “update the outdated immunity for online platforms” and incentivize platforms to act responsibly. The department's recommendations fall into three general categories:

- provide online platforms incentives to address illicit content;

- clarify federal powers to address unlawful content;

- and promote open discourse and greater transparency

Read more about the debate surrounding Section 230 and the Communications Decency Act here.

For more resources and articles on the future of election interference visit our resources pages.

To learn how to spot fake news visit our "Protect Yourself" page.