Deepfakes

By Madison Latiolas | January 2021

Photo: Yuezun Li and Siwei Lyu, "Exposing DeepFake Videos By Detecting Face Warping Artifacts." University at Albany, State University of New York. 1 Nov. 2018.

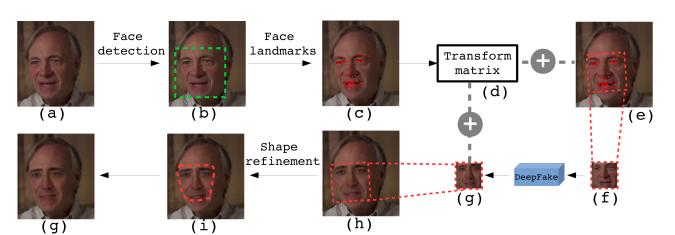

This graphic shows the steps involved in creating a deepfake. The face and its major features are detected and used to compute a transformation matrix. The synthesized face is then refined to match the face shape and replaces the original.
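To make the idea of a transformation matrix concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the method from the paper credited above: it assumes only two landmarks (the eye centers) and a similarity transform (rotation, uniform scale, and shift). The coordinates and function names are hypothetical.

```python
def similarity_from_two_points(src, dst):
    """Return a 2x3 matrix that maps src[0] -> dst[0] and src[1] -> dst[1].

    Points are treated as complex numbers, so the transform is z -> a*z + b,
    where a encodes rotation plus uniform scale and b encodes translation.
    """
    p0, p1 = complex(*src[0]), complex(*src[1])
    q0, q1 = complex(*dst[0]), complex(*dst[1])
    if p0 == p1:
        raise ValueError("source landmarks must be distinct")
    a = (q1 - q0) / (p1 - p0)  # rotation + uniform scale
    b = q0 - a * p0            # translation
    return [[a.real, -a.imag, b.real],
            [a.imag,  a.real, b.imag]]


def apply_transform(matrix, point):
    """Apply a 2x3 transformation matrix to one (x, y) point."""
    x, y = point
    return (matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
            matrix[1][0] * x + matrix[1][1] * y + matrix[1][2])


# Hypothetical eye centers detected in a video frame (pixel coordinates).
detected_eyes = [(120.0, 80.0), (180.0, 100.0)]
# Hypothetical target positions for the eyes in a small, aligned face image.
canonical_eyes = [(20.0, 24.0), (44.0, 24.0)]

M = similarity_from_two_points(detected_eyes, canonical_eyes)
aligned = [apply_transform(M, p) for p in detected_eyes]
```

Applying `M` to every pixel position in the face region would produce the aligned face. Real pipelines typically fit the matrix to many landmarks at once rather than two.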

Photo: Yuezun Li and Siwei Lyu, "Exposing DeepFake Videos By Detecting Face Warping Artifacts." University at Albany, State University of New York. 1 Nov. 2018.

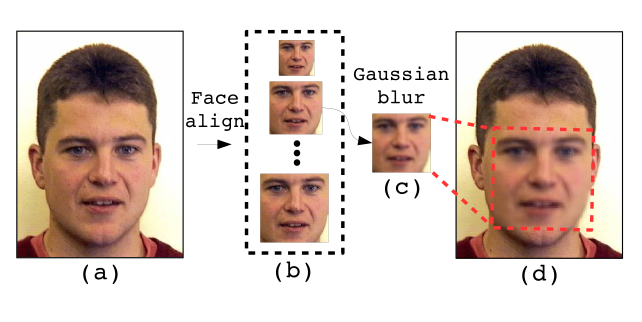

This diagram shows how Gaussian blur arises as a by-product of deepfake facial alignment. Photo A is the original, and photo B shows the face during alignment. Photos C and D show the altered photo with Gaussian blur applied. This kind of blur can be a tell-tale sign of an altered photo or video.
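For readers curious about what Gaussian blur does to pixel values, the following Python sketch applies it to a tiny grayscale image with a hard edge. It illustrates the blur operation only, not a deepfake detector. The image, the `sigma` and `radius` values, and the function names are all assumptions chosen for the example.

```python
import math


def gaussian_kernel(sigma, radius):
    """Return normalized 1-D Gaussian weights of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(image, kernel):
    """Convolve every row with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    width = len(image[0])
    out = []
    for row in image:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, w in enumerate(kernel):
                xi = min(max(x + k - radius, 0), width - 1)
                acc += w * row[xi]
            new_row.append(acc)
        out.append(new_row)
    return out


def transpose(image):
    return [list(col) for col in zip(*image)]


def gaussian_blur(image, sigma=1.0, radius=2):
    """Blur horizontally, then vertically (a Gaussian kernel is separable)."""
    kernel = gaussian_kernel(sigma, radius)
    horizontal = blur_rows(image, kernel)
    return transpose(blur_rows(transpose(horizontal), kernel))


def max_step(image):
    """Largest brightness jump between horizontally adjacent pixels."""
    return max(abs(row[x + 1] - row[x])
               for row in image for x in range(len(row) - 1))


# Toy 6x6 grayscale image: dark left half, bright right half (a hard edge).
sharp = [[0.0] * 3 + [255.0] * 3 for _ in range(6)]
blurred = gaussian_blur(sharp)
```

Comparing `max_step(sharp)` with `max_step(blurred)` shows the effect: the hard edge in the original becomes a gradual ramp after blurring, which is the kind of softened detail the diagram points to.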

For more information about deepfakes, visit our Deepfake Resources page.