Institutional & Governmental Response to Misinformation

By Trey Poché | July 2020

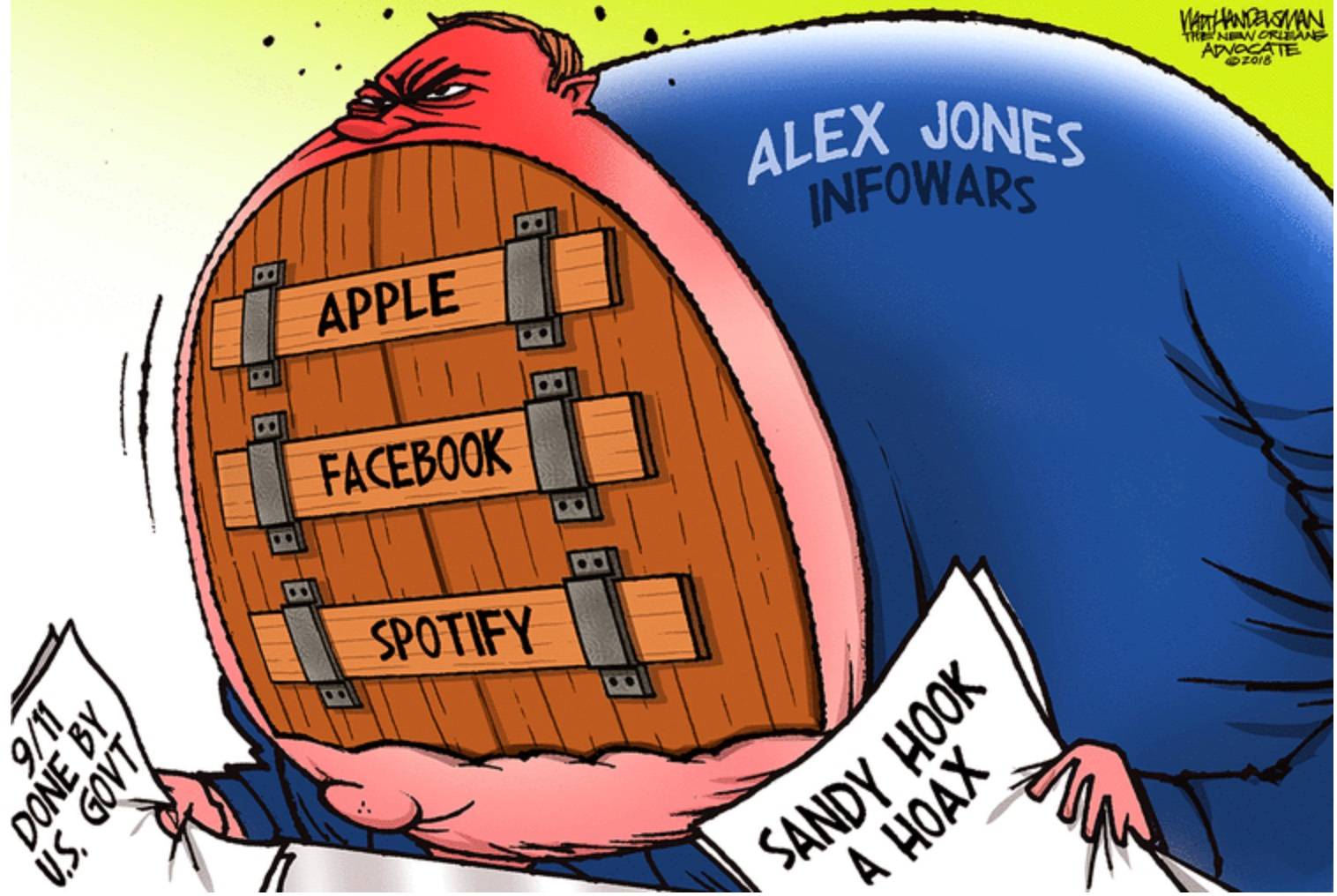

While governments have always combated conspiracies and misinformation, today’s challenge lies in the fact that social media and new media multiply the number of distribution channels. These information channels can allow greater access to political news, but they also allow bad actors to publish easily without regard for journalistic practices. Today, many online “news” sites deal in conspiracy theories and convenient misinformation to advance political goals. Institutions and governments are weighing whether and how to respond.

Governments are taking differing approaches to curbing the spread of online conspiracies and disinformation: some with little action, others with authoritarian infringements on freedom of speech. In 2018, the High Level Expert Group on Fake News and Online Disinformation released its final report to help the European Union craft policies on digital misinformation.

Notably, China, Kazakhstan and Saudi Arabia have taken authoritarian approaches. China has some of the strictest misinformation laws in the world, and online commentary is surveilled so that information does not “undermine economic and social order.” Kazakhstan, rated “not free” for press freedom by Freedom House, criminally investigated two news outlets that accused top government officials of corruption. Saudi Arabia, after the murder of Washington Post columnist Jamal Khashoggi, began issuing threats to people who post “fake news.”

While these illiberal countries took heavy-handed measures to protect their regimes, democratic nations around the world have responded as well. Instead of attempting to stifle good, professional journalism, democratic nations seek to rein in patently false conspiracy theories and misinformation.

In 2018, France passed a law allowing the government to remove fake stories on social media and requiring platforms to disclose the identity of those who run advertisements on their platforms. These powers are available to French authorities for the three months leading up to an election, when foreign intervention can be most damaging.

In 2019, the United Kingdom’s cabinet secretaries announced that they would provide guidance for teaching media literacy to students. Additionally, the National Security Communications Unit is currently in place to combat and deter states and individuals from undermining national security. Sweden’s approach is unique in that it aims to promote factual content, rather than “directly fight false or misleading information,” according to the Poynter Institute.

The United States began seriously considering online disinformation in the wake of its 2016 presidential election. Intelligence agencies confirmed that Russia meddled in American social media through Facebook advertisements and troll accounts to influence the outcome of the election. Since 2016, members of the U.S. Congress have introduced a bill that would require companies, such as Facebook and Google, to keep tabs on those who buy ads from them. Lawmakers have also quizzed tech executives about the disinformation spread during the election. In addition to mounting federal pressure, a few states have considered legislation. California passed a law that requires media literacy resources to be available to students. Washington state is considering a media literacy bill, and Massachusetts passed a bill so students can better “access, analyze and evaluate all types of media,” according to Media Literacy Now.

Media literacy education, while it does not directly curb the flow of misinformation, is indispensable to the goal of having an educated citizenry. Citizens who are taught media literacy skills can choose not to engage with conspiracy theories and misinformation, and can instead consume news from reputable sources.

A 2019 NBC News/Wall Street Journal poll found that 55 percent of Americans believed social media does more to spread lies and falsehoods, compared with only 31 percent who said it does more to spread news and information. While social media companies have mostly escaped government action, the same poll found that 54 percent of Americans did not believe that the federal government was doing enough to oversee and regulate social media. In the absence of tighter governmental regulation, tech companies such as Facebook, Google and Twitter are imposing standards on their content in order to curb the sharing of misinformation on their platforms and improve their reputations. These standards address political advertisements and bot accounts, sometimes by relying on fact-checkers and content moderators.

According to CNET, the tech news site, “Facebook, Twitter and Google each handle political advertising differently. Facebook doesn't send ads from politicians to fact-checkers but includes them in a public database. It also limits the amount of political ads people see on the social network. Twitter has the most restrictive approach, banning political ads with a handful of exceptions. Google, which owns YouTube, allows political campaigns to target people based on age, gender and postal code, but not on voters' political affiliations or public voter records.” Recently, Facebook employees banded together and sent a letter to CEO Mark Zuckerberg requesting six policies that Facebook could implement to prevent civic misinformation in political ads. However, Zuckerberg doubled down, calling for more free speech, not less.

Bot accounts, non-human accounts that can “amplify, manipulate and replicate tweet engagement,” are another source of misinformation. Wired reported that bots can make up more than half of the conversation around specific political topics on Twitter, raising questions about the notion that Twitter dialogue reflects public opinion. Twitter is considering adding an identifying marker so that its users can distinguish between bot and human content. Some action to help social media users identify bot accounts would be a welcome addition, considering the role bot accounts played in the 2016 election.

Over the past few years, Facebook and YouTube (Google) have used machines and human moderators to remove content not in line with community guidelines. However, most of the moderation is geared toward violence, nudity, illegal drugs and terrorism, rather than political news misinformation. It’s not for a lack of personnel that political misinformation is not tightly regulated; YouTube employs 10,000 people while Facebook employs 35,000 to regulate content. Facebook, whether because of high moderation costs or a hard-line belief in freedom of speech, is not enthusiastic about regulating its flow of misinformation.

Most recently, the COVID-19 outbreak has brought with it increased moderation and fact-checking to meet the accompanying surge of misinformation and conspiracy theories. The public health risks associated with virus misinformation were so high that Google, Twitter and Facebook all announced that they would remove dangerous content about COVID-19, a move that showed their willingness to moderate at least some political content.

Still, the reason many social media platforms take a “hands off” approach to misinformation is Section 230 of the 1996 Communications Decency Act. Section 230 shields third-party platforms (such as Facebook, Twitter and Instagram) from liability for user-generated content. Platforms, intent on keeping the law unchanged, now face pressure from both political parties, each of which wants to change Section 230. Republicans want to ensure social media has “neutral” political content, and Democrats are concerned about disinformation, hate speech and election interference. It is not clear how a regulatory consensus will be reached.

The issues of freedom of the press and combating misinformation run through the debate about social media platforms’ community standards and content policies. Because many Americans now get their news from social media, it is increasingly important to consider what news flows to the public today. Is the news trusted and fact-based, or does it come from a bad actor?

Any regulation of these social media platform policies should be carried out with a balanced approach that protects civil discourse from misinformation and upholds the freedom of the press. In the absence of state regulation, social media platforms should further commit to meeting the threats of misinformation and conspiracies, especially in an election year.